Sensor Fusion Technology Will Lead to Safer Autonomous Vehicles

As the race toward truly autonomous vehicles (AVs) continues, and timelines for their arrival keep shifting as new obstacles emerge, one key debate in the industry is which technologies will best bring AVs to a level of safety that makes the public comfortable using them.

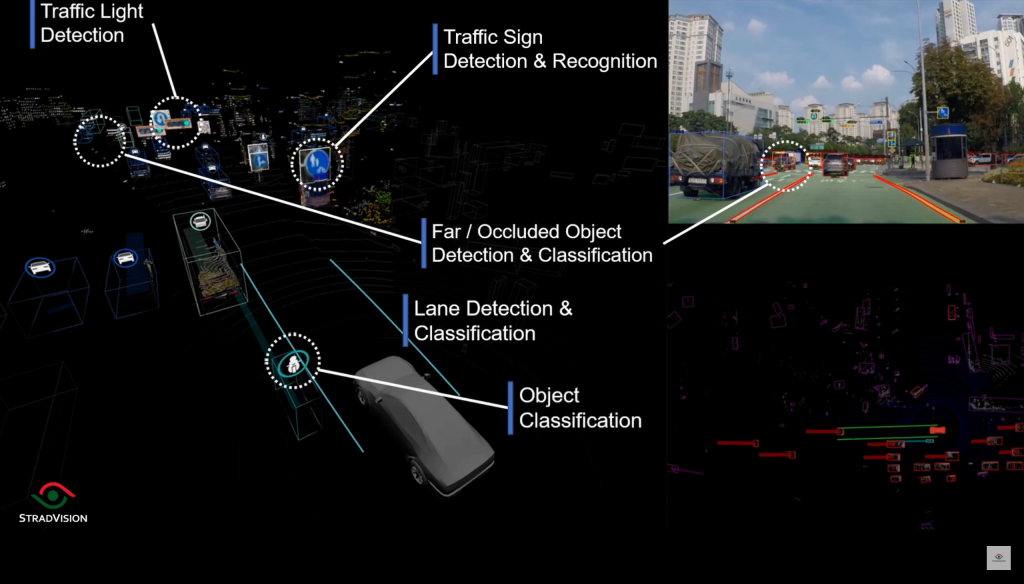

The reality is that it will not be just one technology; the vehicles of the future will rely on many. One approach already being explored by major automotive industry players is sensor fusion, which combines inputs from a variety of sensors to form a single, more accurate picture of the environment around a vehicle.

Why Sensor Fusion?

Think of it in human terms.

We perceive our surroundings through various sensory organs. Although vision is the dominant sense in daily life, the information acquired through this single sense is limited. We therefore fill in our picture of our surroundings with other senses, including hearing and smell.

This concept applies to AVs. Currently, the sensors used in AVs and advanced driver assistance system (ADAS) technology range from cameras to lidars, radars, and sonars. Each of these sensors has its own advantages, but each also has limited capabilities.

In the automotive field, which is essential to daily life, safety is the most important value. This is especially true for AVs, where every mistake could deepen public distrust. The ultimate goal most companies pursue through autonomous driving technology is therefore a safer driving environment.

In a sensor fusion system, each sensor is specialized in acquiring and analyzing specific information. Each sensor therefore has clear strengths and weaknesses in recognizing the surrounding environment, and some limitations are difficult to overcome with a single sensor alone.

Cameras are very effective at classifying vehicles and pedestrians and at reading road signs and traffic lights. However, their abilities may be limited by fog, dust, darkness, snow, and rain. Radar and lidar accurately detect the position and velocity of an object, but they lack the ability to classify objects in detail. They also cannot recognize various road signs because they cannot distinguish colors.
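To make this division of labor concrete, here is a minimal sketch of how a camera's class label might be attached to a radar or lidar track. It is an illustration only, not any vendor's method: the data classes, the `associate` function, the nearest-bearing matching rule, and the 2-degree threshold are all hypothetical, and the numbers are made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CameraDetection:
    label: str          # what the camera thinks the object is
    bearing_deg: float  # angle from the vehicle's forward axis


@dataclass
class RangeTrack:
    bearing_deg: float  # angle reported by radar or lidar
    range_m: float      # distance to the object
    speed_mps: float    # relative speed (negative means approaching)


@dataclass
class FusedObject:
    label: str
    range_m: float
    speed_mps: float


def associate(detections: List[CameraDetection],
              tracks: List[RangeTrack],
              max_gap_deg: float = 2.0) -> List[FusedObject]:
    """Give each range track the label of the closest camera detection.

    Assumption: both sensors report bearings in the same frame and the
    field of view is narrow enough that angle wrap-around can be ignored.
    """
    fused = []
    for track in tracks:
        best = min(detections,
                   key=lambda d: abs(d.bearing_deg - track.bearing_deg),
                   default=None)
        if best is not None and abs(best.bearing_deg - track.bearing_deg) <= max_gap_deg:
            label = best.label
        else:
            label = "unknown"  # radar/lidar saw it, the camera did not
        fused.append(FusedObject(label, track.range_m, track.speed_mps))
    return fused


# Hypothetical inputs: two camera detections and three range tracks.
detections = [CameraDetection("pedestrian", -10.0), CameraDetection("vehicle", 5.0)]
tracks = [RangeTrack(-9.5, 12.0, 1.2), RangeTrack(5.3, 40.0, -3.0), RangeTrack(30.0, 8.0, 0.0)]
objects = associate(detections, tracks)
for obj in objects:
    print(obj)
```

The third track has no camera match, so it keeps its position and speed but is labeled "unknown". A production system would use a more robust association step, but the idea is the same: the camera supplies the class, and the ranging sensor supplies where the object is and how fast it moves.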

Sensor fusion technology reduces distortion and gaps in data by integrating the various types of data acquired. The sensor fusion software algorithm fills in information about blind spots that a single sensor cannot detect, and it integrates and balances overlapping data detected by multiple sensors simultaneously. With this comprehensive information, the technology provides more accurate and reliable environmental modeling than any single sensor and enables more intelligent driving.
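One common textbook way to "balance" overlapping measurements is inverse-variance weighting, where the more precise sensor gets more weight. The sketch below assumes two independent estimates of the same distance; the `Measurement` class, the `fuse` function, and the noise figures are hypothetical and are not taken from any particular product.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    value: float     # estimated distance to the object, in metres
    variance: float  # uncertainty of the estimate, in metres squared


def fuse(a: Measurement, b: Measurement) -> Measurement:
    """Inverse-variance weighted average of two independent estimates."""
    weight_a = 1.0 / a.variance
    weight_b = 1.0 / b.variance
    total = weight_a + weight_b
    fused_value = (weight_a * a.value + weight_b * b.value) / total
    fused_variance = 1.0 / total  # never larger than the smaller input variance
    return Measurement(fused_value, fused_variance)


# Hypothetical readings: a noisy camera depth estimate and a precise lidar range.
camera = Measurement(value=21.0, variance=4.0)
lidar = Measurement(value=20.0, variance=0.25)
fused = fuse(camera, lidar)
print(f"fused distance: {fused.value:.2f} m, variance: {fused.variance:.3f}")
```

The fused estimate sits between the two readings, much closer to the lidar value, and its variance is lower than that of either sensor alone. This is the basic reason combining overlapping data can be more reliable than trusting one source.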

Camera plus lidar

Sensor fusion is one of the essential technologies for achieving the safe driving environment the automotive industry is pursuing. Why, then, among the various sensors, does the industry keep paying attention to the combination of camera and lidar?

The answer lies in the completeness of the information obtained when camera and lidar data are integrated. Sensor fusion between the camera and lidar produces intuitive and effective results, much like creating 3D graphics on a computer. Lidar captures the 3D shape of an object, and that shape is aligned with the precise 2D image information from the camera to accurately reconstruct the surrounding environment, as if placing a texture on a 3D object.
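The "texture on a 3D object" idea can be sketched with a simple pinhole camera model: each lidar point is projected into the image, and the color of the pixel it lands on is attached to the point. This is an illustrative sketch under stated assumptions, namely that the lidar points have already been transformed into the camera's coordinate frame and that lens distortion is ignored. The function names, the intrinsics (`fx`, `fy`, `cx`, `cy`), and the tiny test image are hypothetical.

```python
from typing import List, Optional, Tuple

Point3D = Tuple[float, float, float]  # (x, y, z) in the camera frame, in metres
Color = Tuple[int, int, int]          # (r, g, b)


def project(point: Point3D, fx: float, fy: float,
            cx: float, cy: float) -> Optional[Tuple[int, int]]:
    """Pinhole projection to (u, v) pixel coordinates; None if behind the camera."""
    x, y, z = point
    if z <= 0:
        return None
    return int(round(fx * x / z + cx)), int(round(fy * y / z + cy))


def colorize(points: List[Point3D], image: List[List[Color]],
             fx: float, fy: float, cx: float, cy: float) -> List[Tuple[Point3D, Color]]:
    """Attach a camera color to every lidar point that falls inside the image."""
    height, width = len(image), len(image[0])
    colored = []
    for point in points:
        pixel = project(point, fx, fy, cx, cy)
        if pixel is None:
            continue  # point is behind the camera
        u, v = pixel
        if 0 <= u < width and 0 <= v < height:
            colored.append((point, image[v][u]))  # rows are v, columns are u
    return colored


# Hypothetical 4x4 image whose color encodes the pixel position, for easy checking.
image = [[(u * 10, v * 10, 0) for u in range(4)] for v in range(4)]
points = [(0.0, 0.0, 5.0), (1.0, 0.0, 2.0), (0.0, 0.0, -1.0), (10.0, 0.0, 1.0)]
colored = colorize(points, image, fx=2.0, fy=2.0, cx=2.0, cy=2.0)
for point, color in colored:
    print(point, color)
```

Only the first two points receive a color: the third lies behind the camera and the fourth projects outside the image. In a real vehicle, getting the lidar points into the camera frame requires careful calibration and time synchronization between the two sensors, which is a large part of the engineering effort.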

Bringing autonomous vehicles to our daily lives

Sensor fusion is attracting attention as an effective way to implement more precise autonomous driving technology, but challenges remain. Before autonomous vehicles can enter our daily lives, they must offer technical reliability that guarantees human safety and the versatility to be applied to a variety of vehicle types.

As described above, as the number of sensors in a vehicle increases, the accuracy and reliability of its recognition of the surrounding environment increase. On the other hand, the amount of data to be processed also grows, which requires more expensive hardware with higher computing power.

One solution is to make object recognition software lighter and more efficient, so that AI-based object recognition is possible even on embedded platforms. This is where StradVision is focusing its efforts. Its SVNet software exhibits the same ability at 50% of the calculation speed of its competitors in the industry and provides competitive performance on platforms one or two performance tiers lower.

This reduces costs and leaves hardware headroom for more advanced functions such as sensor fusion, which will play an essential role as the industry works to deliver AVs and more advanced ADAS to the masses.